We will continue to look into file ingestion , modern files like parquet, ORC, Avro. We already discuss the different file types including these modern ones with their comparative study if you missed it here is the link -

For this exercise purpose I am using below flow:

Image by Author

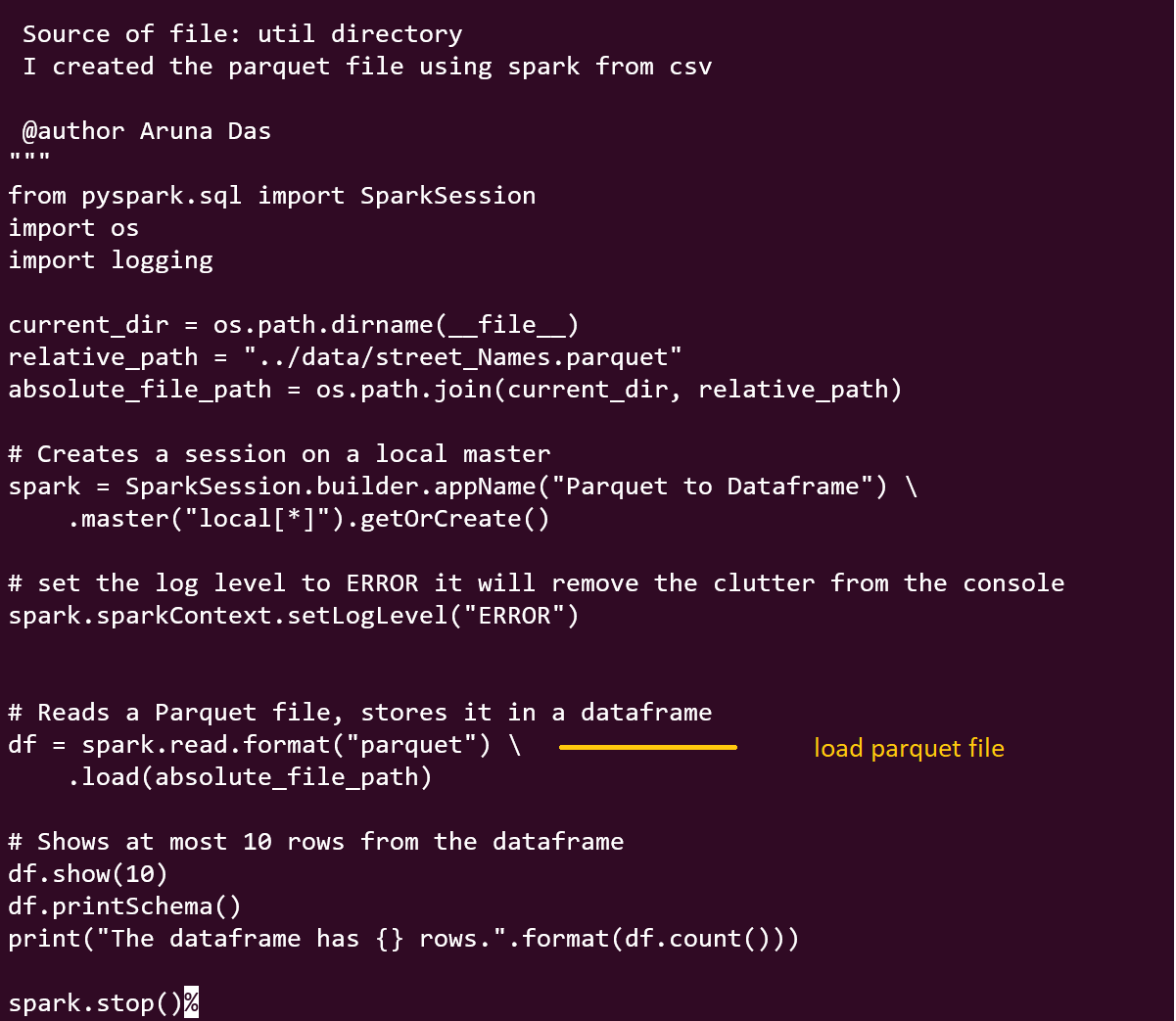

I used a util spark code to convert csv files into avro, ORC and parquet for this exercise purpose and then again spark code to read those files and display on console. All the code can be found in the github location -

These modern file formats address the shortcoming of existing file format specially in splitting , compression , schema evaluation, row vs column preference in distributed computing set up. In Distributed computing set up with big data you will find these file formats because of their efficient storage and processing, which are crucial for keeping the cost under control from both processing(reading) and storage cost involved in these systems.

- Parquet Parser: Spark natively supports the Parquet file format, which is a columnar storage format optimized for big data processing. Spark’s Parquet parser enables efficient reading and writing of Parquet files

Image by Author

Output

Image by Author

2. ORC Parser: Spark also supports the ORC (Optimized Row Columnar) file format, which is another columnar storage format optimized for analytics workloads. The ORC parser in Spark allows you to read and write ORC files.

Image by Author

Output

Image by Author

3. Avro Parser: Spark provides support for the Avro data serialization format. The Avro parser allows you to read and write Avro data, which is self-describing and schema-based.

To ingest avro file You will need to add a library , as Avro is not natively supported by spark. I am using this version org.apache.spark:spark-avro_2.12:3.2.1 once you installed it the parser will work but you will have to pass the package as argument to your spark-submit.

bin/spark-submit — packages org.apache.spark:spark-avro_2.12:3.2.1 /home/aruna/spark/app/article/SparkSeries/SparkSeries5.1/avroToDataframe.py

Image by Author

Output

Image by Author